Engagement Bait Isn't Just A Problem On Threads - It's Everywhere

Rewarding "engagement" above everything is turning social platforms into toxic hellscapes

Engagement bait isn’t new. It’s been a problem on social media for years, but on Threads it’s reached an ugly peak that makes the site unusable. In this #NoSmallTalk, we explore how engagement powers social platforms and argue that, as a metric, it’s doing more harm than good.

Threads has an engagement bait problem. If, like me, you migrated there to escape the cold nuclear winter of Twitter, you can’t help but notice it. That’s on top of the bland, PG-13 vibe, as if you’d just opened the digital equivalent of an office birthday card where everyone is trying really hard to write something witty but they all end up sounding the same. Putting the lame atmosphere aside for a moment, the level of brazen engagement baiting is some of the worst I’ve seen - and I worked at UNILAD! But what exactly is it? Well, it’s stuff like this:

This user recently challenged himself to get a billion views on Threads and managed impressive numbers:

But what value are you offering users if your feed is just incessant bait, with no sense of authenticity, personality or usefulness? And what are those numbers worth if you can only achieve them by resorting to misogyny-bait like this:

I’m purposely not linking to his account because I don’t want to give him any more attention, but his page is indicative of the current state of Threads. Even Adam Mosseri recently admitted they have a problem.

There’s even engagement bait about engagement bait, suggesting some kind of infinite regress that will at some point break the simulation we’re all currently living in.

Shitposters are satirising it by deliberately posting rage bait, and reporter Katie Notopoulos’s thread is truly inspirational, drawing attention to how problematic and empty it all is.

But we can’t just blame the engagement baiters

The level of engagement baiting speaks to a larger problem that all platforms share, one that’s unfortunately baked into their business models: rewarding “engagement” above all other metrics.

It’s not just Meta platforms - LinkedIn is a big culprit too. While I’ll allow that there are often more substantive discussions happening in the comments on LinkedIn, the feed is still full of bait-y hacks, listicles and carousels with headlines like “[Insert Sacred Cow] Is Dead” or “If You’re Not Doing This One Thing Then You’re A Failure & An Embarrassment”. The end result is usually some corporate backslapping or fawning discussion about how impressive Steven Bartlett is, giving the general impression of a very measured, fake experience - so pretty much the vibe of most workplaces.

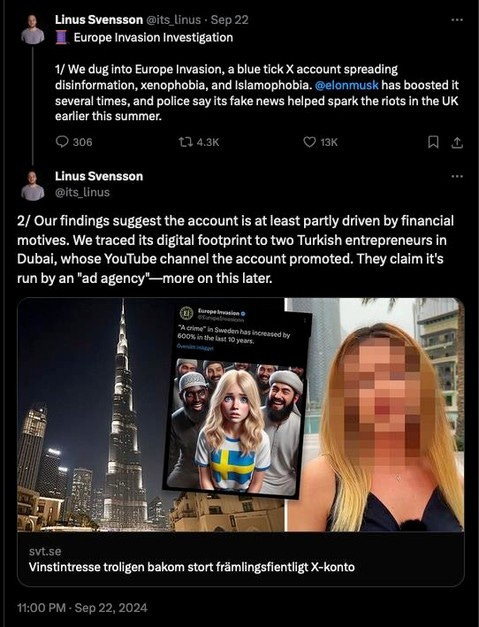

But elsewhere online, the relentless prioritising of engagement is helping people far worse than Steven Bartlett. This is particularly true on platforms like Twitter, whose revenue sharing incentivises bad actors to simply post the most inflammatory, racist content for cash. Reporter Linus Svensson, investigating Europe Invasion (a blue-tick account posting inflammatory material that was shared by Elon Musk), discovered that the account was run by two Turkish entrepreneurs in Dubai and driven by financial motives. That account, and others, were linked to the disinformation that fuelled race riots across the UK after the attack in Southport. It used to be that you’d have to become a Russian operative to get paid for misinformation, but thanks to the free-market economics of Elon’s X, you don’t need to!

That is just the tip of the iceberg when it comes to how hate is monetised on Twitter, but it’s the logical endpoint when two things happen: engagement by any means is rewarded and, crucially, content moderation is removed.

In what other ways is the engagement engine affecting users?

Platforms use engagement as a metric and gear their systems towards it (above more substantive metrics) because it doesn’t discriminate between good comments and bad comments, or between a helpful user experience and a hateful one. They don’t care how damaging or inflammatory the content is; they just want your attention. Encouraging engagement by any means is how they keep you on the page, and they’ll structure their entire platform around it. Consider TikTok: with infinite scroll and short-form video, it has basically built a perfect engine for engagement, giving rise to problems with misogynistic and racist content. Not to mention disinformation, conspiracy content and dumb trends.

But beyond that, I personally worry about the long-term impact of engagement-prioritised social platforms on mental health, especially for young people. We’re also seeing the rise of phenomena like Popcorn Brain, which is radically affecting people’s ability to concentrate. The other sad truth is that heavy social media use can become a genuine addiction. We’ve all seen a friend, partner or family member struggle to pay attention to a conversation or watch a TV show without looking at their phone. (This isn’t only the fault of social media engagement: blame can also go to our always-on work culture, facilitated by apps like Teams and Slack and by weak protections for the right to sign off.) The platforms have essentially built machines that feed users the most dopamine-spiking content, leaving people hopelessly addicted and scrolling endlessly. It’s the digital equivalent of Doritos, but instead of Tangy Cheese, the flavour is Misogyny and Race Hate. This is an increasingly alarming public health issue, and sooner or later we must deal with how engagement-powered social media platforms contribute to addiction, radicalisation and poor mental health.

How will AI affect the current system?

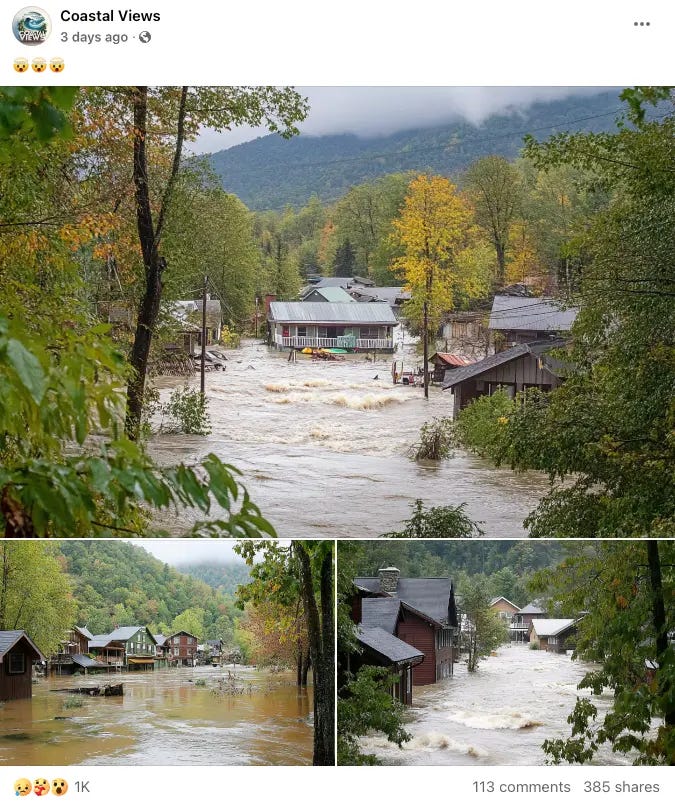

The future of our engagement-obsessed social landscape looks bleak when you throw the coming flood of low-quality AI slop into the mix. Futurism has reported that, over on Facebook, unscrupulous users like the account Coastal Views are creating fake images of Hurricane Helene as engagement bait, driving revenue by linking to Etsy shopfronts where they sell AI-generated prints. With no moderation of AI-generated content, expect AI slop to ruin your favourite platform soon, if it hasn’t happened already.

I’ve seen something of an explosion of AI slop on YouTube: cheaply made video essays that combine generative-AI images, AI voiceover and licensed material into super low-value, often incorrect content that attempts to mimic higher-quality creators. As an artistic endeavour, I’ve yet to see a really compelling use of generative AI, so for now its main value on social media is letting unscrupulous creators make cheap content that baits engagement and captures attention away from creators who have worked harder to secure their audience by virtue of, you know, actually creating the content.

But if engagement were deprioritised and less rewarded as a metric, it would disincentivise lazy scammers from making this content in the first place - why do it if you can’t get paid or cheaply grow a page? It could also shift the focus away from AI-generated content towards more substantive and interesting uses of AI. For example, we could train AI to assess content against criteria like quality or usefulness, and so moderate content faster than is currently possible.

Maybe it’s time to ditch engagement?

I’m not just making a case for social media marketers to prioritise other metrics for clients, though that is a valuable start. I mean it more broadly, for all of us as users: if the way platforms currently reward engagement creates the conditions for low-value bait and hate-filled, inflammatory content to spread, actively polluting our discourse, then why use engagement as a yardstick at all? It creates a shit environment, not just for users but also for brands wanting to advertise on platforms. That’s a big part of why so many advertisers have fled Twitter. For community managers, engagement farming tactics may inflate numbers in the short term, but they result in hollow interactions that do little to foster genuine connections with an audience. It comes across as inauthentic (“in essence, chasing engagement at any cost can compromise a brand’s authenticity and credibility”) and may still not translate into sales. Engagement looks cool as a metric, but if it makes no distinction between negative and positive interactions, and if it doesn’t translate into meaningful action like clickthroughs or sales, then what’s the point?

So what can be measured instead of engagement for a better online experience?

For marketers, the answer is reach, impressions and, crucially, conversions, which give a more detailed picture of how their audience derives value from their page, how well they’re building their community and how they’re driving sales. But marketing is only part of the story, and simply ignoring the way platforms reward engagement won’t go very far in addressing the problem.

A report commissioned by Pinterest looked at how platforms can incorporate Non-Engagement Signals as supplementary metrics to create a better experience, e.g. less clickbait and inflammatory content. They’ve drawn up a Field Guide for platforms that asks for things like in-app user surveys and better content labelling (tell us what content you like/don’t like at signup and we’ll weight that when showing you content). Pinterest’s research also suggests that using Non-Engagement Signals achieves better long-term user retention, albeit at the cost of short-term losses in engagement as engagement bait is devalued. However, this still only really addresses the issue at the level of the individual user and could exacerbate the problem of echo chambers. But if a detailed in-app survey were made mandatory at sign-up, you could hopefully shift the dial on what kind of content gets made to serve that audience.
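To make the idea a little more concrete, here is a rough, hypothetical sketch in Python of what blending engagement with non-engagement signals might look like in a feed ranker. It assumes three made-up inputs per post (a predicted engagement score, a quality score from some classifier, and a "bait" flag from moderation) plus topics a user said they like or dislike in a sign-up survey. Every name, field and weight here is an assumption for illustration only; it is not Pinterest’s method or any platform’s actual algorithm.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    topic: str
    predicted_engagement: float  # 0..1, hypothetical predicted likes/replies/reshares
    quality_score: float         # 0..1, hypothetical output of a quality classifier
    flagged_as_bait: bool        # hypothetical moderation label


def rank_score(post: Post, liked_topics: set[str], disliked_topics: set[str],
               w_engagement: float = 0.3, w_quality: float = 0.4,
               w_preference: float = 0.3, bait_penalty: float = 0.5) -> float:
    """Blend engagement with non-engagement signals (all weights are arbitrary)."""
    # Stated preference from an assumed sign-up survey: +1 liked, -1 disliked, 0 neutral.
    if post.topic in liked_topics:
        preference = 1.0
    elif post.topic in disliked_topics:
        preference = -1.0
    else:
        preference = 0.0
    score = (w_engagement * post.predicted_engagement
             + w_quality * post.quality_score
             + w_preference * preference)
    # Posts flagged as engagement bait are pushed down rather than rewarded.
    if post.flagged_as_bait:
        score -= bait_penalty
    return score


def rank_by_engagement(posts: list[Post]) -> list[Post]:
    """Engagement-only ranking, for comparison."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)


def rank_feed(posts: list[Post], liked_topics: set[str],
              disliked_topics: set[str]) -> list[Post]:
    """Ranking that mixes engagement with quality, stated preference and a bait penalty."""
    return sorted(posts,
                  key=lambda p: rank_score(p, liked_topics, disliked_topics),
                  reverse=True)


bait = Post("bait", "culture war", predicted_engagement=0.95,
            quality_score=0.1, flagged_as_bait=True)
useful = Post("useful", "gardening", predicted_engagement=0.4,
              quality_score=0.9, flagged_as_bait=False)
feed = [bait, useful]

print([p.post_id for p in rank_by_engagement(feed)])              # → ['bait', 'useful']
print([p.post_id for p in rank_feed(feed, {"gardening"}, set())])  # → ['useful', 'bait']
```

With these invented numbers, the bait post tops a pure engagement ranking but drops below a lower-engagement post the user actually said they wanted to see. Engagement still counts for something here; it just stops being the only thing that counts.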

It’s not just on users, though. Platforms themselves have a responsibility to combat the way they are being abused to promote hate speech and disinformation, so ultimately there could, and should, be regulatory pressure on platforms to adjust their algorithmic weighting of content: disincentivising hate speech and disinformation, and promoting those who make high-quality, verified, entertaining content. The content moderation systems platforms use already flag content for various infractions - racist language, homophobia etc. But what if we widened the scope of content moderation to appraise content along other lines too: is this informative, is it high-quality information, is it artistic? Content that meets those criteria could then be rewarded. Machine learning models could be trained to assess content quality, with ultimate responsibility overseen by human assessors. The hope is that, by downgrading engagement and introducing controls around quality, a world beyond engagement could produce social media platforms that put human well-being at their heart by championing quality, artistic content that enriches our digital experience. That’s something worth engaging with.